LangChain is a framework for building applications on top of large language models (LLMs). It supports use cases such as chatbots, generative question-answering, and summarization.

The framework allows you to “chain” together different components to create advanced use cases with LLMs. These components include prompt templates, LLMs themselves (such as GPT-3 or BLOOM), agents that use LLMs to make decisions, and memory for short-term or long-term storage.
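To make the idea of chaining concrete, here is a minimal sketch in plain Python. It does not use LangChain's own classes: `render_prompt`, `fake_llm`, and `SimpleChain` are hypothetical names invented for illustration, and `fake_llm` only echoes part of its input instead of calling a real model.

```python
from typing import Callable, List


def render_prompt(template: str, **values: str) -> str:
    """Fill a prompt template using str.format."""
    return template.format(**values)


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; it echoes the prompt's last line."""
    return "ANSWER(" + prompt.splitlines()[-1] + ")"


class SimpleChain:
    """Prompt template -> LLM, with a list acting as short-term memory."""

    def __init__(self, template: str, llm: Callable[[str], str]) -> None:
        self.template = template
        self.llm = llm
        self.history: List[str] = []

    def run(self, question: str) -> str:
        # Step 1: build the prompt from the template and the stored history.
        prompt = render_prompt(
            self.template,
            history="\n".join(self.history),
            question=question,
        )
        # Step 2: call the (fake) model.
        answer = self.llm(prompt)
        # Step 3: remember the exchange for the next call.
        self.history.append(f"Q: {question} A: {answer}")
        return answer


chain = SimpleChain("{history}\nQuestion: {question}", fake_llm)
first = chain.run("What is LangChain?")
second = chain.run("What is a chain?")
```

Each component (template, model, memory) can be swapped independently, which is the property the framework's abstractions aim to provide.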

Why LangChain?

The key advantages of using LangChain are:

- Components: LangChain offers modular abstractions, and implementations of them, for working with LLMs. The components are designed to be easy to use on their own, whether or not you adopt the rest of the LangChain framework.

- Use-Case Specific Chains: Chains are assemblies of components built to accomplish a particular use case. They provide a higher-level interface that makes it easy to get started with a specific application, and they can be customized to your needs.

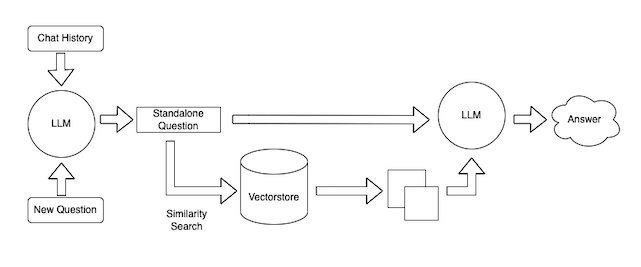

LangChain simplifies working with LLMs over your own text by splitting it into chunks (or summaries), embedding those chunks in a vector space, and retrieving the chunks most similar to a question when it is asked. The text can be preprocessed ahead of time or collected in real time, and the retrieved chunks are then used when interacting with the LLM. The same approach is useful not only for question-answering but also for scenarios such as code search and semantic search.
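The split, embed, and search steps can be sketched without any external library. The example below is an illustration under simplifying assumptions, not LangChain's implementation: the "embedding" is a toy bag-of-words count rather than a learned embedding model, and all function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def split_into_chunks(text: str, chunk_size: int = 8) -> List[str]:
    """Split text into chunks of at most `chunk_size` words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def embed(text: str) -> Counter:
    """Toy embedding: word counts (a real system would use an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(question: str, chunks: List[str], top_k: int = 1) -> List[str]:
    """Return the `top_k` chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine_similarity(q, embed(c)), reverse=True)
    return ranked[:top_k]


document = (
    "Prompt templates turn user input into model prompts. "
    "Vector stores hold embeddings and support similarity search. "
    "Agents use a model to choose the action."
)
chunks = split_into_chunks(document, chunk_size=8)
best = search("Which component supports similarity search?", chunks)
```

In a real application the retrieved chunk would be placed into a prompt alongside the question, so the model answers from the relevant text rather than from the whole document.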

The LangChain framework consists of several modules:

- Schema: This module includes interfaces and base classes used throughout the library.

- Models: This module provides integrations with various LLMs, chat models, and embedding models.

- Prompts: This module handles prompt templates and functionality related to working with prompts, including output parsers and example selectors.

- Indexes: This module offers patterns and functionality for preparing your own data for use with language models. It includes document loaders, text splitters, vector stores, and retrievers.

- Memory: Memory is the concept of persisting state between calls of a chain or agent. LangChain provides a standard interface, various memory implementations, and examples of chains or agents that utilize memory.

- Chains: Chains involve sequences of calls to LLMs or other utilities, going beyond a single LLM call. LangChain provides a standard interface for chains, integrations with other tools, and end-to-end chains for common applications.

- Agents: Agents make decisions using LLMs, taking actions based on observations and repeating the process. LangChain provides a standard interface for agents, a selection of agents to choose from, and examples of end-to-end agents.
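The agent loop described in the last item (decide, act, observe, repeat) can be sketched as follows. This is a plain-Python illustration rather than LangChain's agent interface: `fake_policy` is a hypothetical rule-based stand-in for the LLM's decision step, and `calculator` is a hypothetical tool.

```python
from typing import Callable, Dict, List, Tuple


def calculator(expression: str) -> str:
    """Hypothetical tool: adds integers written as 'a+b+c'."""
    return str(sum(int(part) for part in expression.split("+")))


TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator}


def fake_policy(task: str, observations: List[str]) -> Tuple[str, str]:
    """Stand-in for the LLM's decision step.

    Returns (action, action_input). With no observations yet it calls the
    calculator; once an observation exists it finishes with that value.
    """
    if not observations:
        return "calculator", task
    return "finish", observations[-1]


def run_agent(task: str, max_steps: int = 5) -> str:
    """Repeat decide -> act -> observe until the policy chooses to finish."""
    observations: List[str] = []
    for _ in range(max_steps):
        action, action_input = fake_policy(task, observations)
        if action == "finish":
            return action_input
        # Act with the chosen tool and record what it returned.
        observations.append(TOOLS[action](action_input))
    raise RuntimeError("agent did not finish within max_steps")


result = run_agent("2+3+4")
```

The `max_steps` cap is a design choice in this sketch so that a policy which never chooses to finish cannot loop forever.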

For details on these modules and how to use them, see the LangChain documentation and API reference.

Let’s get started

References

- LangChain GitHub repository (hwchase17/langchain): Building applications with LLMs through composability

- https://python.langchain.com/en/latest/

- https://python.langchain.com/en/latest/modules/models/llms/integrations.html

- https://www.deeplearning.ai/short-courses/