LangChain is a framework for building applications on top of large language models, and it provides memory modules that help chatbots keep track of the context of a conversation. One of these modules is Entity Memory, a more complex type of memory that extracts entities from the conversation and builds up a summary of each one.

Entity Memory uses a large language model (LLM) to identify the entities mentioned in the conversation and to generate a summary for each of them. The extracted entities and their summaries are kept in an entity store, which can live in memory or be backed by an external database such as Redis. This type of memory is useful when you want to extract and retain specific facts about people, places, or things that come up in the conversation.
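The entity store is essentially a key-value map from entity names to summaries, and the backend can be swapped because every store exposes the same small interface. The sketch below is a minimal, hypothetical illustration of that idea in plain Python: the class names `EntityStore` and `DictEntityStore` are made up for this example, and the method names (`get`, `set`, `exists`, `delete`) are an assumption loosely modeled on LangChain's entity stores, not a copy of its API.

```python
from abc import ABC, abstractmethod
from typing import Dict, Optional


class EntityStore(ABC):
    """Hypothetical interface: maps an entity name to its summary text."""

    @abstractmethod
    def get(self, key: str, default: Optional[str] = None) -> Optional[str]:
        """Return the summary for `key`, or `default` if it is unknown."""

    @abstractmethod
    def set(self, key: str, value: str) -> None:
        """Create or overwrite the summary for `key`."""

    @abstractmethod
    def exists(self, key: str) -> bool:
        """Report whether `key` has a stored summary."""

    @abstractmethod
    def delete(self, key: str) -> None:
        """Forget `key`; deleting an unknown key is a no-op."""


class DictEntityStore(EntityStore):
    """In-memory backend: data lives in a dict and is lost when the process exits."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def get(self, key: str, default: Optional[str] = None) -> Optional[str]:
        return self._data.get(key, default)

    def set(self, key: str, value: str) -> None:
        self._data[key] = value

    def exists(self, key: str) -> bool:
        return key in self._data

    def delete(self, key: str) -> None:
        self._data.pop(key, None)


store: EntityStore = DictEntityStore()
store.set("Deven", "Deven is working on a hackathon project.")
print(store.get("Deven"))   # → Deven is working on a hackathon project.
print(store.exists("Sam"))  # → False
```

A Redis-backed store would implement the same four methods with Redis reads and writes, so code that talks to an `EntityStore` would not need to change when the backend does.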

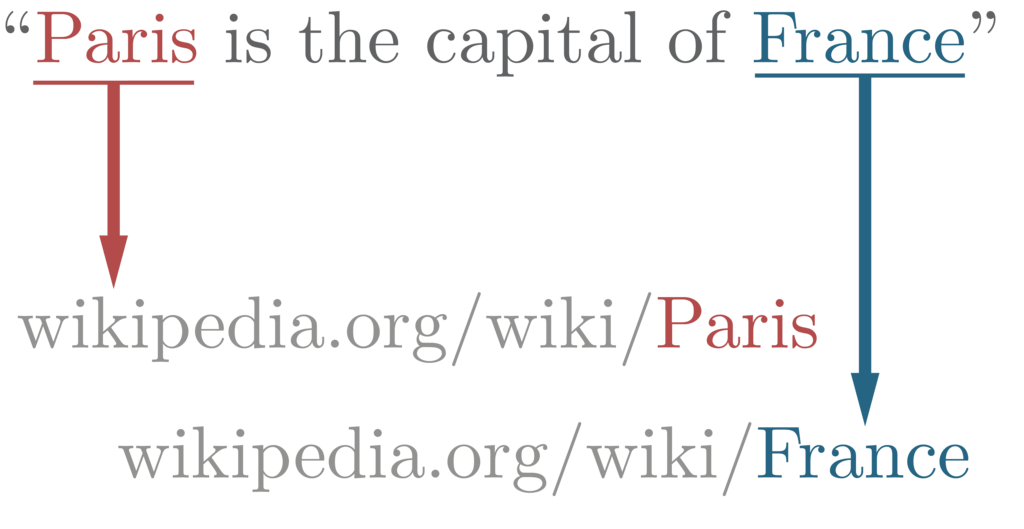

Named-entity recognition (NER) is a subtask of information extraction in NLP that seeks to locate named entities mentioned in unstructured text and classify them into predefined categories such as person names, organizations, locations, medical codes, time expressions, quantities, monetary values, and percentages. Entity Memory performs an NER-style task: rather than running a dedicated NER model, it prompts the LLM to pick out the entities in the conversation and then saves them in the entity store.
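The prompt-based extraction step can be sketched as a prompt template plus a small parser. This is a hypothetical, offline-runnable illustration: `EXTRACTION_TEMPLATE`, `parse_entities`, `extract_entities`, and `fake_llm` are invented names, the prompt wording is not LangChain's, and `fake_llm` stands in for a real model call. The assumption that the model answers with a comma-separated list, or `NONE` when nothing is found, reflects the general approach but should be checked against the LangChain source.

```python
from typing import Callable, List

# Hypothetical prompt; LangChain ships its own extraction prompt with different wording.
EXTRACTION_TEMPLATE = (
    "Extract all proper nouns (people, organizations, places, products) "
    "from the last line of the conversation.\n"
    "Return them as a comma-separated list, or NONE if there are none.\n\n"
    "Conversation history:\n{history}\n\n"
    "Last line:\n{input}\n\n"
    "Output:"
)


def parse_entities(raw: str) -> List[str]:
    """Turn 'A, B' into ['A', 'B']; 'NONE' or empty output becomes []."""
    text = raw.strip()
    if not text or text.upper() == "NONE":
        return []
    return [name.strip() for name in text.split(",") if name.strip()]


def extract_entities(llm: Callable[[str], str], history: str, user_input: str) -> List[str]:
    """Fill the template, send it to the model, and parse the reply."""
    prompt = EXTRACTION_TEMPLATE.format(history=history, input=user_input)
    return parse_entities(llm(prompt))


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call so the sketch runs offline.
    return "Deven, Sam" if "Deven" in prompt else "NONE"


print(extract_entities(fake_llm, "", "Deven and Sam are working on a hackathon project."))
print(extract_entities(fake_llm, "", "What's the weather like?"))
```

Passing the model in as a plain callable keeps the parsing logic testable without network access; with a real LLM, only `fake_llm` would be replaced.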

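Under the hood, LangChain keeps this memory in ordinary Python data structures: the chat history is a list of messages, and the in-memory entity store is a dictionary that maps each entity name to its summary. As the conversation goes on, Entity Memory remembers facts about specific entities and refines each entity's summary, so its knowledge of that entity builds up over time. When a new message arrives, the summaries of the entities it mentions are added to the prompt, which gives the model the relevant context and makes the conversation feel more natural and engaging for the user.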
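To make the list-and-dictionary design concrete, here is a toy version of the whole loop: each turn is appended to a history list, each mentioned entity's summary is updated in a dictionary, and the relevant summaries are injected into the next prompt. `ToyEntityMemory`, `KNOWN_NAMES`, `naive_extract`, and `append_summary` are hypothetical names; the two helper functions are crude stand-ins for what LangChain does with LLM calls (extracting entities and rewriting each summary), used here only so the sketch runs without a model.

```python
from typing import Callable, Dict, List

KNOWN_NAMES = ("Deven", "Sam", "LangChain")  # hypothetical vocabulary for the stub


def naive_extract(text: str) -> List[str]:
    """Stand-in for LLM extraction: report known names that appear in the text."""
    return [name for name in KNOWN_NAMES if name in text]


def append_summary(entity: str, old_summary: str, new_fact: str) -> str:
    """Stand-in for LLM summarization: append the new sentence to the old summary."""
    return f"{old_summary} {new_fact}".strip()


class ToyEntityMemory:
    """Hypothetical sketch: chat history in a list, entity summaries in a dict."""

    def __init__(self, extract: Callable[[str], List[str]],
                 summarize: Callable[[str, str, str], str]) -> None:
        self.history: List[str] = []         # turns, oldest first
        self.summaries: Dict[str, str] = {}  # entity name -> running summary
        self._extract = extract
        self._summarize = summarize

    def save_turn(self, user_input: str, ai_output: str) -> None:
        """Record one exchange and fold it into each mentioned entity's summary."""
        self.history.append(f"Human: {user_input}")
        self.history.append(f"AI: {ai_output}")
        for entity in self._extract(user_input):
            old = self.summaries.get(entity, "")
            self.summaries[entity] = self._summarize(entity, old, user_input)

    def build_prompt(self, user_input: str) -> str:
        """Inject summaries of entities mentioned in the new input, then the history."""
        known = [f"{e}: {self.summaries[e]}"
                 for e in self._extract(user_input) if e in self.summaries]
        lines = ["Known entities:", *known, "", "Conversation:", *self.history,
                 f"Human: {user_input}", "AI:"]
        return "\n".join(lines)


memory = ToyEntityMemory(naive_extract, append_summary)
memory.save_turn("Deven is building a chatbot with LangChain.", "Sounds fun!")
memory.save_turn("Sam is helping Deven.", "Great teamwork.")
print(memory.summaries["Deven"])
print(memory.build_prompt("What is Deven working on?"))
```

Appending sentences keeps the example deterministic; a real summarizer would instead ask the LLM to rewrite the old summary so it stays short as facts accumulate. Only entities mentioned in the new input are injected, which keeps the prompt focused even when the store holds many entities.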

In conclusion, LangChain's Entity Memory is a useful tool for conversational AI: it extracts and stores specific information from the conversation and feeds it back into the prompt, making the conversation more engaging and natural for the user.